Episerverless = Episerver + Azure Functions

I have talked about this topic a couple of times solo, and also together with Henrik Fransas at Episerver Partner Close-up in Stockholm this year. I received a lot of questions about source code availability and about recordings of the session(s). Unfortunately, there are no recordings from the conferences I/we spoke at, so I decided to compile everything into a blog post.

Objective

The idea for the conference talk(s) was to show how you can integrate Azure Functions with Episerver and how nicely the two play together. There are zillions of problems to solve with Azure Functions and zillions of ways of solving them. However, I've chosen a pretty straightforward problem to show how you can leverage cloud services and integrate them into Episerver solutions.

Problem description: we are building a hacker-style website where images are not shown as images but are instead converted to green-on-black ASCII art. The challenge is with editors: they are quite lazy and almost always refuse to write descriptions for images. There have also been a couple of incidents where editors uploaded images with inappropriate content. In these cases the website administrator has to be notified (the notification channel is not important and may change in the future).

For me, as one of the developers of the website, this seems to be a perfect candidate for trying out Azure Functions and some other cloud services.

So let's start by laying out the components and the solution architecture for this fictional website.

Solution Architecture

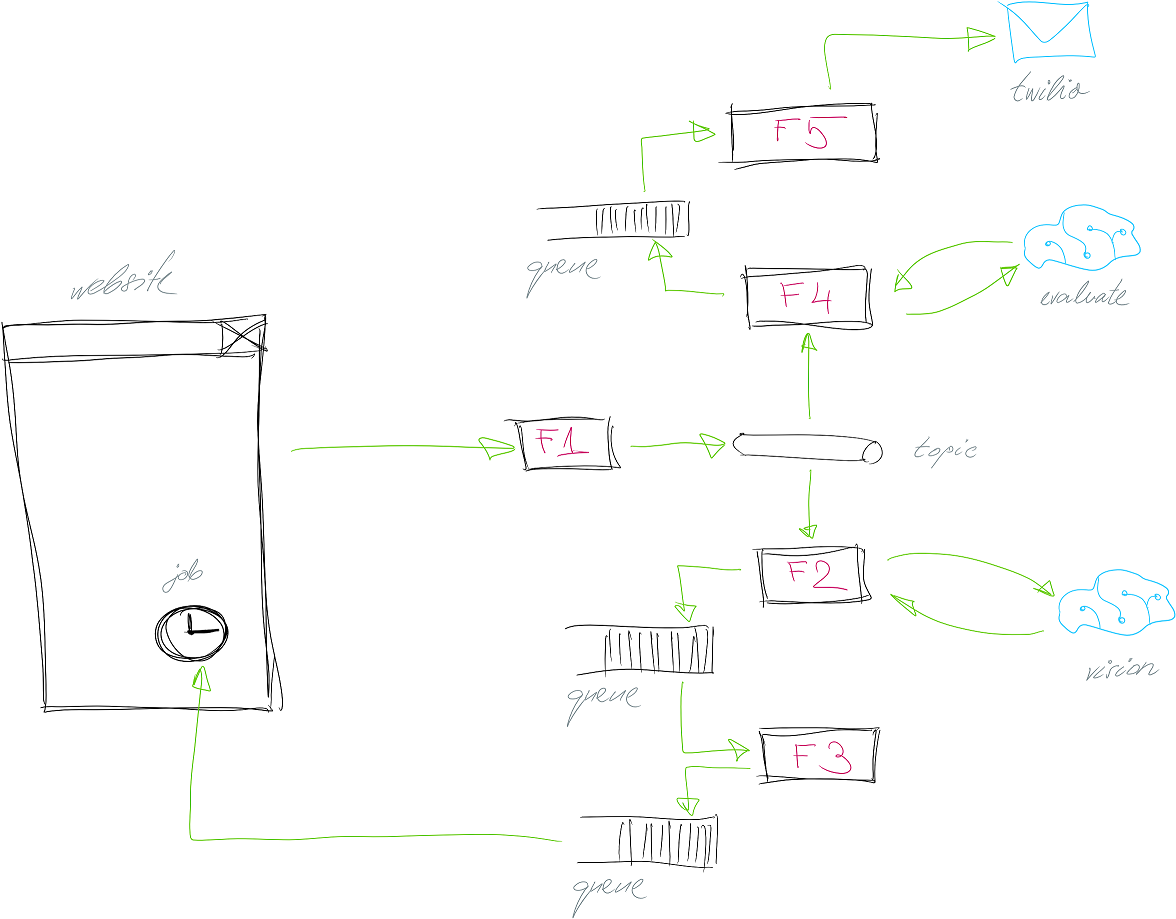

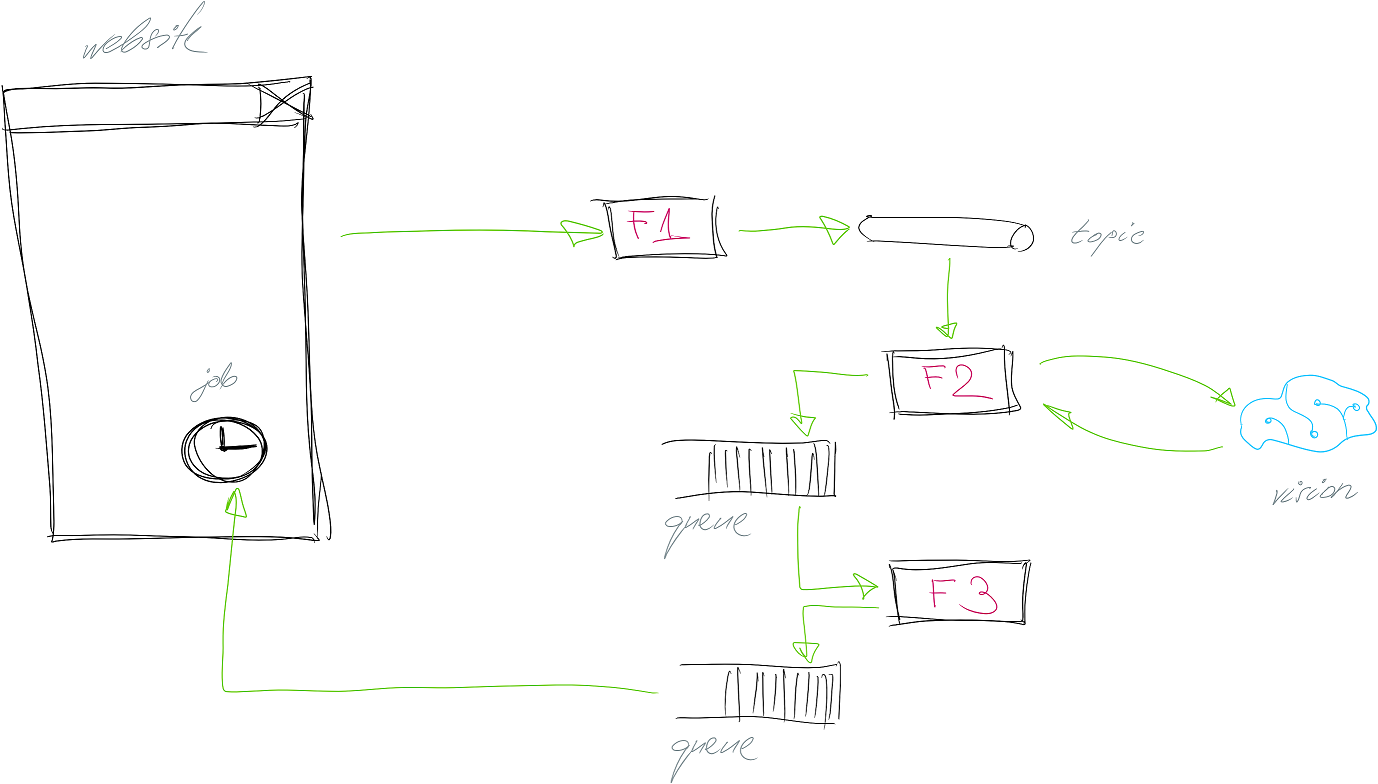

There are two main players in the solution: the website and the cloud services. The website acts as the consumer of the cloud services. Azure Functions are an ideal candidate for delivering this functionality, and Azure Cognitive Services are a good fit when it comes to processing and analyzing images. Notifications will be delivered via the Twilio service (which, by the way, has native support in Azure Functions).

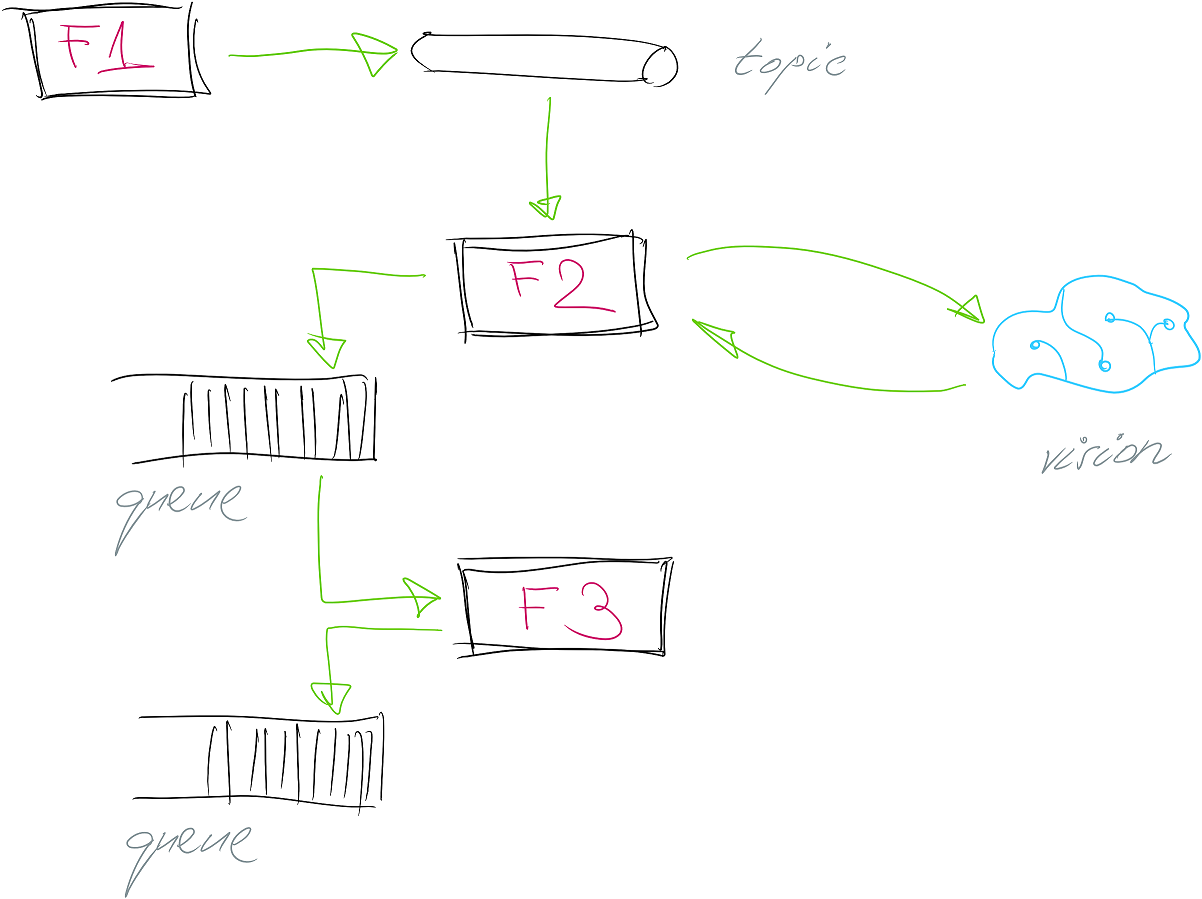

The functionality could be split into the following separate functions (remember that a function should do only a single thing and nothing more):

- Functionality 1 - this acts as the entrance gate for the whole pipeline. This component is responsible for receiving requests for ASCII art conversion and image analysis.

- Functionality 2 - this component is responsible for asking Azure Cognitive Services to analyze the image and describe it.

- Functionality 3 - the third link in the chain is responsible for actually converting the image to ASCII art. It has to run after the analysis: if we converted the image to ASCII art first and then ran the vision analysis, the results would most probably not be very intelligent.

- Functionality 4 - here we can slide in a service responsible for analyzing the image for inappropriate content (content moderation, as they call it).

- Functionality 5 - if somebody uploaded an inappropriate image (as decided by Function4), this service sends the actual notification to the administrator.

Let's try to draw the architecture for our imagined solution:

As you can see, inter-function communication is done either via ServiceBus or Storage Queues. These are the easiest communication channels for "sticking" Azure Functions together.

A ServiceBus topic between Function1 and Functions 2 & 4 is necessary because there are multiple "consumers" of the same "signal": that an image has been uploaded for processing. With Storage queues there is only a single consumer of each queue item. Once it's handled, the message disappears from the queue, making it impossible for another function to pick it up and do its own work. Therefore the most appropriate communication mechanism here is durable pub/sub: Azure ServiceBus topics, where each consuming function reads from its own subscription.
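To make the difference concrete, here is a small in-memory sketch in plain C# (no Azure SDK involved) contrasting the two delivery models. InMemoryQueue and InMemoryTopic are hypothetical illustrations, not the ServiceBus or Storage APIs; the subscription names to-ascii and to-eval are the ones Function2 and Function4 listen on later in the post.

```csharp
using System.Collections.Generic;

// Hypothetical illustration: a queue hands each message to exactly one consumer.
public class InMemoryQueue
{
    private readonly Queue<string> _items = new Queue<string>();

    public void Send(string message) => _items.Enqueue(message);

    // Whoever receives first "wins"; the message is gone for everyone else.
    public string Receive() => _items.Count > 0 ? _items.Dequeue() : null;
}

// Hypothetical illustration: a topic copies each message into every subscription,
// so every subscriber sees the same "image uploaded" signal.
public class InMemoryTopic
{
    private readonly Dictionary<string, InMemoryQueue> _subscriptions =
        new Dictionary<string, InMemoryQueue>();

    public InMemoryQueue Subscribe(string name)
    {
        if (!_subscriptions.TryGetValue(name, out var queue))
        {
            queue = new InMemoryQueue();
            _subscriptions[name] = queue;
        }
        return queue;
    }

    public void Publish(string message)
    {
        foreach (var subscription in _subscriptions.Values)
            subscription.Send(message);
    }
}
```

With a plain queue, whichever function received the message first would be the only one to see it; with the topic, both the to-ascii and the to-eval subscriptions get their own copy.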

For the Content Moderator cognitive service (used to evaluate content) you will need to sign up here.

Detailed Description

Below is a more detailed description of each function and its related components.

Function1 - Accept

The first function acts as a facade for the whole image analysis and processing pipeline. Its main (and only) purpose is to capture the incoming request for image processing, "register" it and return a status code to the caller. Registering the request in this context means creating an item in a ServiceBus topic. A topic is required here because there will be more than one consumer of the item: Function2 (proceeding with vision analysis of the image) and Function4 (proceeding with content review of the image).

Calling Function1 from Episerver is straightforward. We subscribe to the media upload event in Episerver and then post the image byte[] to the Azure Function (I know about ServiceLocator, and I've been actively preaching against it, but there are cases where avoiding it is not entirely possible simply because of the underlying framework).

```csharp
[InitializableModule]
[ModuleDependency(typeof(InitializationModule))]
public class EventHandlerInitModule : IInitializableModule
{
    private IAsciiArtUploader _uploader;
    private UrlHelper _urlHelper;

    public void Initialize(InitializationEngine context)
    {
        var canon = ServiceLocator.Current.GetInstance<IContentEvents>();
        _uploader = ServiceLocator.Current.GetInstance<IAsciiArtUploader>();
        _urlHelper = ServiceLocator.Current.GetInstance<UrlHelper>();

        canon.CreatedContent += OnImageCreated;
    }

    public void Uninitialize(InitializationEngine context)
    {
        var canon = ServiceLocator.Current.GetInstance<IContentEvents>();
        canon.CreatedContent -= OnImageCreated;
    }

    private void OnImageCreated(object sender, ContentEventArgs args)
    {
        if (!(args.Content is ImageData img))
            return;

        using (var stream = img.BinaryData.OpenRead())
        {
            var bytes = stream.ReadAllBytes();
            _uploader.Upload(img.ContentGuid.ToString(),
                             bytes,
                             _urlHelper.ContentUrl(img.ContentLink));
        }
    }
}
```

There is also a small helper class that deals with the physical file upload:

```csharp
internal class AsciiArtUploader : IAsciiArtUploader
{
    private readonly IAsciiArtServiceSettingsProvider _settings;

    public AsciiArtUploader(IAsciiArtServiceSettingsProvider settings)
    {
        _settings = settings;
    }

    public void Upload(string fileId, byte[] bytes, string imageUrl)
    {
        AsyncHelper.RunSync(() => CallFunctionAsync(fileId, bytes, imageUrl));
    }

    private async Task<string> CallFunctionAsync(string contentReference,
                                                 byte[] byteData,
                                                 string imageUrl)
    {
        var req = new ProcessingRequest
        {
            FileId = contentReference,
            Content = byteData,
            Width = 150,
            ImageUrl = imageUrl
        };

        using (var content = new StringContent(JsonConvert.SerializeObject(req)))
        {
            content.Headers.ContentType = new MediaTypeHeaderValue("application/json");

            var response = await Global.HttpClient.Value.PostAsync(
                    _settings.Settings.RequestFunctionUri,
                    content).ConfigureAwait(false);

            return await response.Content.ReadAsStringAsync()
                                 .ConfigureAwait(false);
        }
    }
}
```

IAsciiArtServiceSettingsProvider is just another small helper that provides various settings, as it's not a good idea to hard-code the URLs of the functions or any other "dynamic" part of the component's settings.
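The provider itself isn't shown in the post, so here is a minimal sketch of what it could look like. The property names (RequestFunctionUri, StorageUrl, DoneQueueName) are the ones the uploader and the downloader use; the AsciiArtServiceSettings class, the DictionarySettingsProvider and the setting keys are hypothetical. In a real Episerver site the values would likely come from web.config appSettings; here they are passed in as a dictionary to keep the sketch testable.

```csharp
using System;
using System.Collections.Generic;

// Assumed shape of the settings object; property names match their usage in the post.
public class AsciiArtServiceSettings
{
    public string RequestFunctionUri { get; set; }
    public string StorageUrl { get; set; }      // used with CloudStorageAccount.Parse
    public string DoneQueueName { get; set; }
}

public interface IAsciiArtServiceSettingsProvider
{
    AsciiArtServiceSettings Settings { get; }
}

// Hypothetical provider: reads the values once from a key/value source
// (e.g. appSettings) and fails fast if a required key is missing.
public class DictionarySettingsProvider : IAsciiArtServiceSettingsProvider
{
    public DictionarySettingsProvider(IReadOnlyDictionary<string, string> values)
    {
        Settings = new AsciiArtServiceSettings
        {
            RequestFunctionUri = Require(values, "ascii-art:request-function-uri"),
            StorageUrl = Require(values, "ascii-art:storage-url"),
            DoneQueueName = Require(values, "ascii-art:done-queue-name")
        };
    }

    public AsciiArtServiceSettings Settings { get; }

    private static string Require(IReadOnlyDictionary<string, string> values, string key)
    {
        if (values.TryGetValue(key, out var value) && !string.IsNullOrWhiteSpace(value))
            return value;

        throw new InvalidOperationException($"Missing required setting '{key}'.");
    }
}
```

Failing at construction time means a misconfigured site reports the missing key on startup instead of on the first image upload.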

Also, since the Episerver event handler is invoked synchronously but we need to upload the image via an async REST client (HttpClient), we need to do some black async magic:

```csharp
/// <summary>
/// Credits:
/// http://stackoverflow.com/questions/9343594/how-to-call-asynchronous-method-from-synchronous-method-in-c
/// </summary>
public static class AsyncHelper
{
    private static readonly TaskFactory MyTaskFactory =
        new TaskFactory(CancellationToken.None,
                        TaskCreationOptions.None,
                        TaskContinuationOptions.None,
                        TaskScheduler.Default);

    public static TResult RunSync<TResult>(Func<Task<TResult>> func)
    {
        return MyTaskFactory.StartNew(func)
                            .Unwrap()
                            .GetAwaiter()
                            .GetResult();
    }

    public static void RunSync(Func<Task> func)
    {
        MyTaskFactory.StartNew(func)
                     .Unwrap()
                     .GetAwaiter()
                     .GetResult();
    }
}
```

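Note: you might be using HttpClient the wrong way. Creating a new instance per request can exhaust sockets under load, which is why the uploader above reuses a single shared instance (Global.HttpClient).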
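The Global class isn't shown in the post, but the uploader's Global.HttpClient.Value suggests a lazily created, application-wide instance. A minimal sketch, assuming that shape (the 30-second timeout is an arbitrary illustrative choice; HttpClient's default is 100 seconds):

```csharp
using System;

// Assumed shape of the Global.HttpClient used by AsciiArtUploader:
// one lazily created HttpClient shared by the whole application.
public static class Global
{
    // Lazy<T> is thread-safe by default, so concurrent first calls
    // still end up with a single instance.
    public static readonly Lazy<System.Net.Http.HttpClient> HttpClient =
        new Lazy<System.Net.Http.HttpClient>(() => new System.Net.Http.HttpClient
        {
            // Fail reasonably fast instead of waiting for the default timeout
            Timeout = TimeSpan.FromSeconds(30)
        });
}
```

Every call to Global.HttpClient.Value returns the same client, so connections are pooled and reused across uploads.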

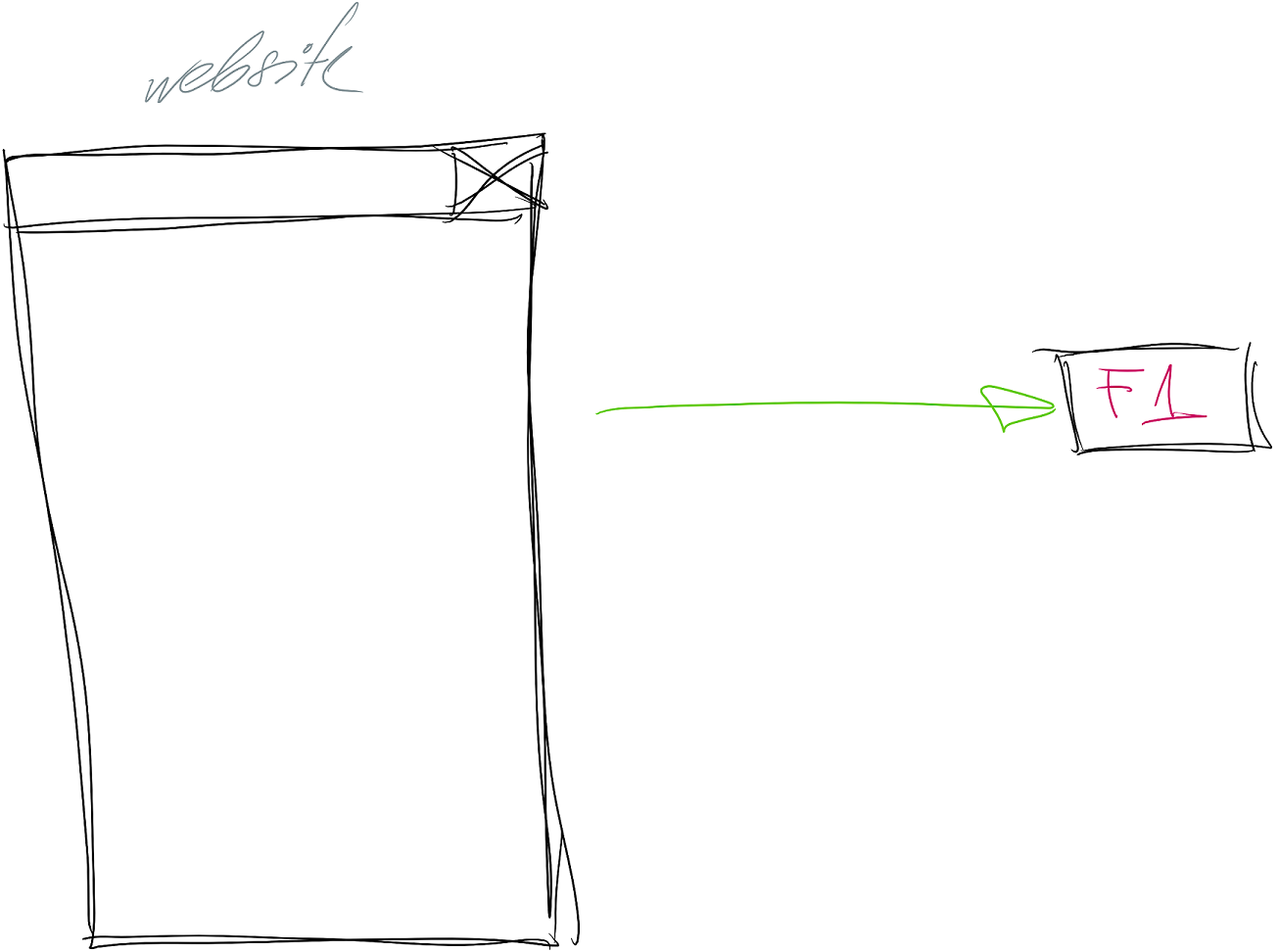

The interaction from the Episerver website to the function is this particular fragment of our architecture diagram:

The function definition is also not hard to grasp: its only purpose is to register incoming requests for image processing, save them to the topic and return an HTTP status code with a reference to the saved item.

```csharp
[StorageAccount("my-storage-connection")]
[ServiceBusAccount("my-servicebus-connection")]
public static class Function1
{
    [FunctionName("Function1")]
    public static HttpResponseMessage Run(
        [HttpTrigger(AuthorizationLevel.Anonymous, "post")] ProcessingRequest request,
        [Blob("%input-container%/{FileId}")] CloudBlockBlob outBlob,
        TraceWriter log,
        [ServiceBus("mytopic", AccessRights.Manage)] out BrokeredMessage topicMessage)
    {
        log.Info("(Fun1) Received image for processing...");

        AsyncHelper.RunSync(async () =>
        {
            await outBlob.UploadFromByteArrayAsync(request.Content,
                                                   0,
                                                   request.Content.Length);
        });

        var analysisRequest = new AnalysisReq
        {
            BlobRef = outBlob.Name,
            Width = request.Width,
            ImageUrl = request.ImageUrl
        };

        topicMessage = new BrokeredMessage(analysisRequest);

        return new HttpResponseMessage(HttpStatusCode.OK)
        {
            Content = new StringContent(outBlob.Name)
        };
    }
}
```

As you can see, creating functions with the Visual Studio Azure Functions templates can be a bit verbose from time to time. There is a lot of ceremony with attribute classes all over the place, but the tooling somehow needs to understand what kind of bindings your functions have in order to generate the appropriate function.json file at the end. Of course, there is also the option to create (and maintain) this file manually, but I prefer tooling for that. Basically, the function decorations tell us the following:

- it's an HttpTriggered function, meaning that it just sits there waiting for incoming requests. For demo purposes this function is not authorized, so anyone can post anything, but in real life you should definitely secure your functions with invoke keys

- it has connection strings to the storage ([StorageAccount]) and ServiceBus ([ServiceBusAccount]) accounts

- it also has a reference to a Storage Blob container (simply because the image itself might be too large to store in a topic item)

- as an output of the function, a BrokeredMessage is produced (out parameter), which is stored in the ServiceBus topic

- and as the result of the function call, an HttpResponseMessage is returned to the caller

Interesting to note here is the [Blob("%input-container%/{FileId}")] CloudBlockBlob outBlob argument. Let's look at the incoming HTTP trigger parameter ProcessingRequest (the body of the request):

```csharp
public class ProcessingRequest
{
    public ProcessingRequest()
    {
        Width = 100;
    }

    public string FileId { get; set; }
    public string ImageUrl { get; set; }
    public int Width { get; set; }
    public byte[] Content { get; set; }
}
```

The Blob attribute argument can be read as follows:

- %input-container% is a reference to an app setting, exposed as an environment variable. You can also reference values from the settings.json file this way:

```json
{
  "Values": {
    ...
    "input-container": "in-container",
    ...
  }
}
```

- {FileId}, however, is a reference to the property with the same name in the incoming request (class ProcessingRequest). This technique is very nice and flexible. If you look at how the request object was filled in Episerver, FileId is the ContentGuid of the media file:

```csharp
private void OnImageCreated(object sender, ContentEventArgs args)
{
    if (!(args.Content is ImageData img))
        return;

    using (var stream = img.BinaryData.OpenRead())
    {
        var bytes = stream.ReadAllBytes();
        _uploader.Upload(img.ContentGuid.ToString(),
                         bytes,
                         _urlHelper.ContentUrl(img.ContentLink));
    }
}
```
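To illustrate how the two kinds of tokens in %input-container%/{FileId} get resolved, here is a simplified sketch. It is not the WebJobs SDK's actual implementation (the runtime does this for you); BindingPathResolver is a hypothetical helper that replaces %name% with an app setting and {Property} with the matching property of the trigger payload.

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

// Simplified illustration of binding expression resolution, not the SDK's code.
public static class BindingPathResolver
{
    public static string Resolve(string template,
                                 IReadOnlyDictionary<string, string> appSettings,
                                 object triggerPayload)
    {
        // %name% -> value from app settings ("Values" in settings.json when running locally)
        var withSettings = Regex.Replace(template, @"%([^%]+)%", m =>
            appSettings.TryGetValue(m.Groups[1].Value, out var value)
                ? value
                : throw new InvalidOperationException($"Unknown setting '{m.Groups[1].Value}'."));

        // {Property} -> value of the property with the same name on the trigger payload
        return Regex.Replace(withSettings, @"\{([^}]+)\}", m =>
        {
            var property = triggerPayload.GetType().GetProperty(m.Groups[1].Value)
                ?? throw new InvalidOperationException($"No property '{m.Groups[1].Value}'.");
            return Convert.ToString(property.GetValue(triggerPayload));
        });
    }
}
```

With "input-container" set to "in-container" and a ProcessingRequest whose FileId is the image's ContentGuid, the blob path resolves to in-container/ followed by that Guid, which is why the same Guid later works as BlobRef throughout the pipeline.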

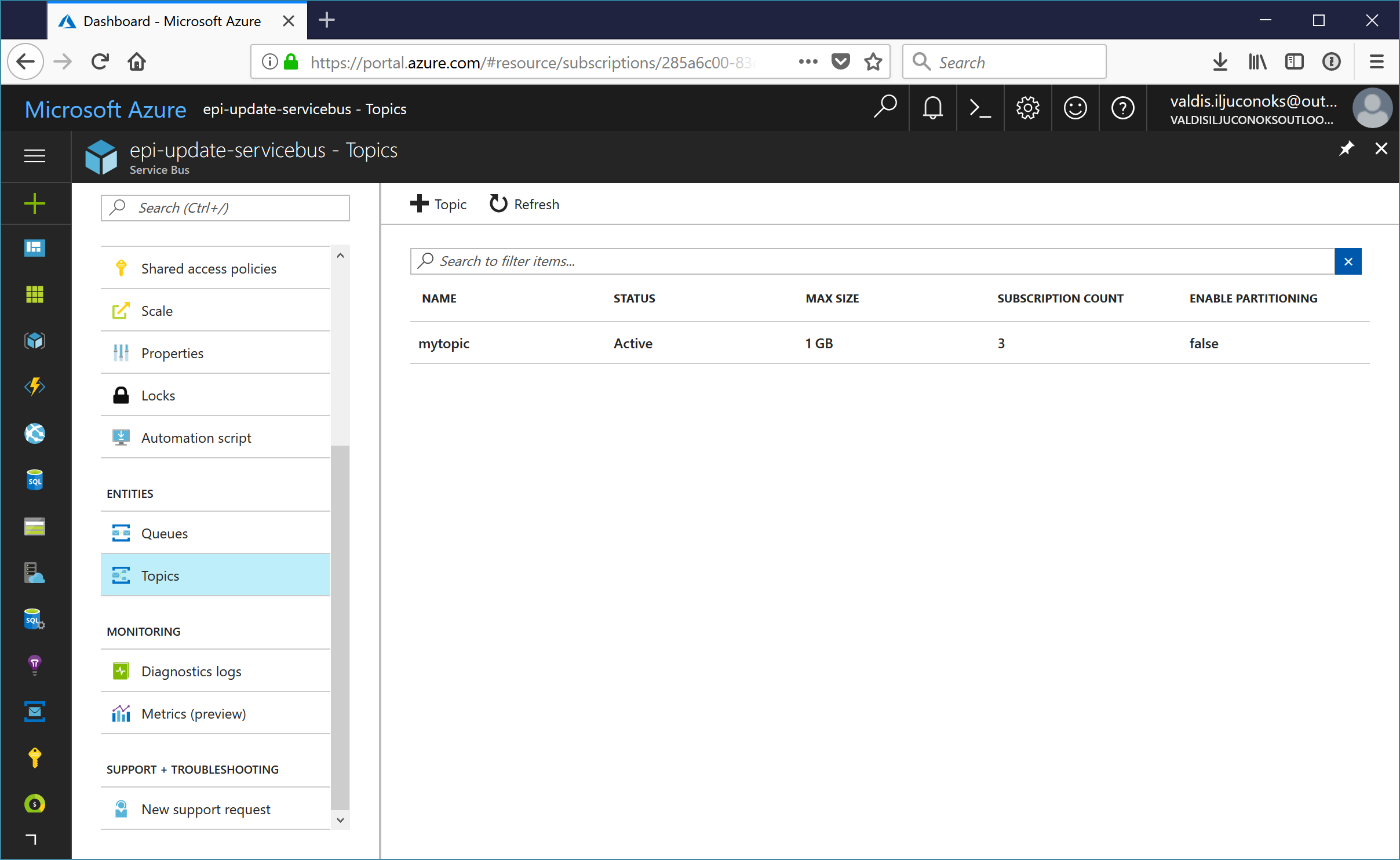

In order for this function to operate, there has to be a setting named my-servicebus-connection in settings.json (or local.settings.json if you are running locally) that points to the Azure ServiceBus service. You will also need to create a new topic named mytopic (or whatever name you choose, it just needs to match the one in the decoration of Function1).

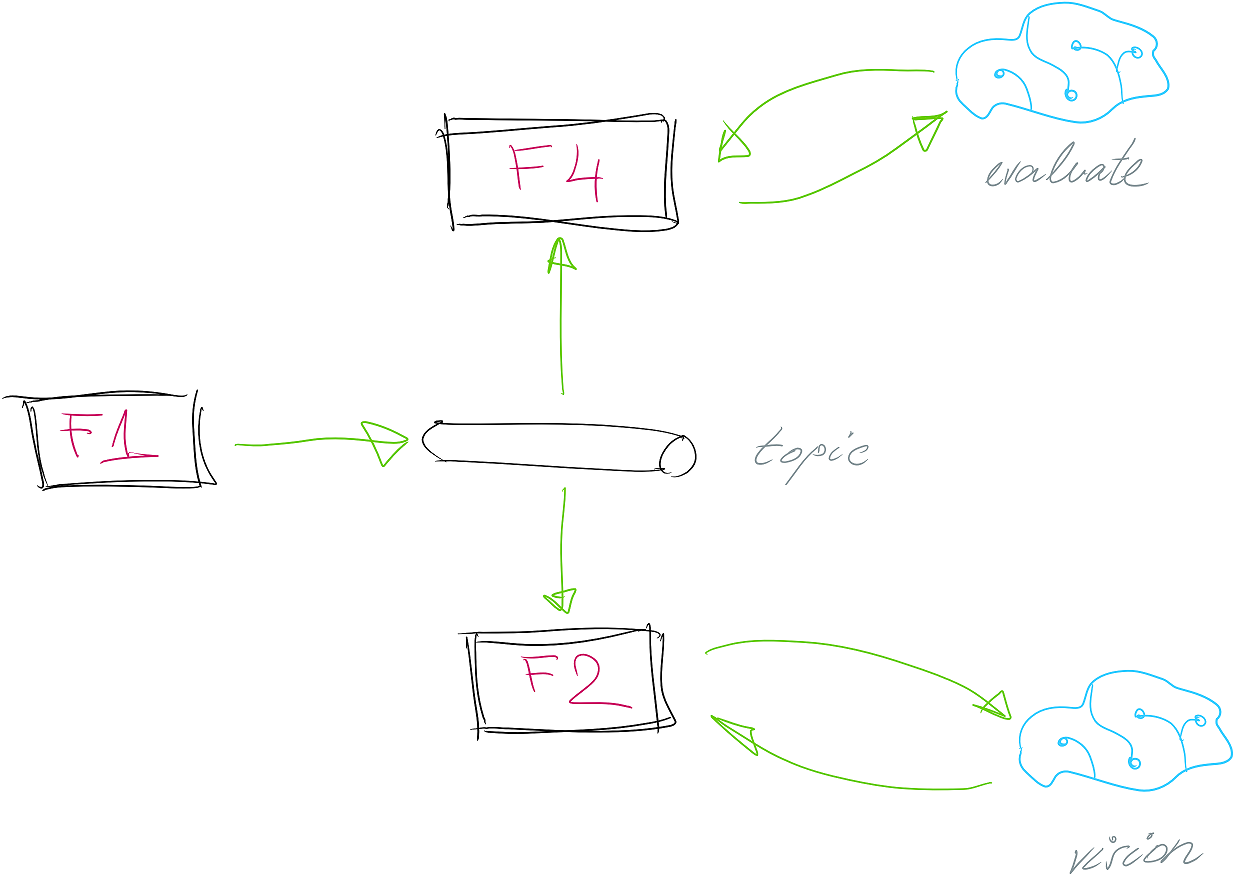

Function2 - Analysis

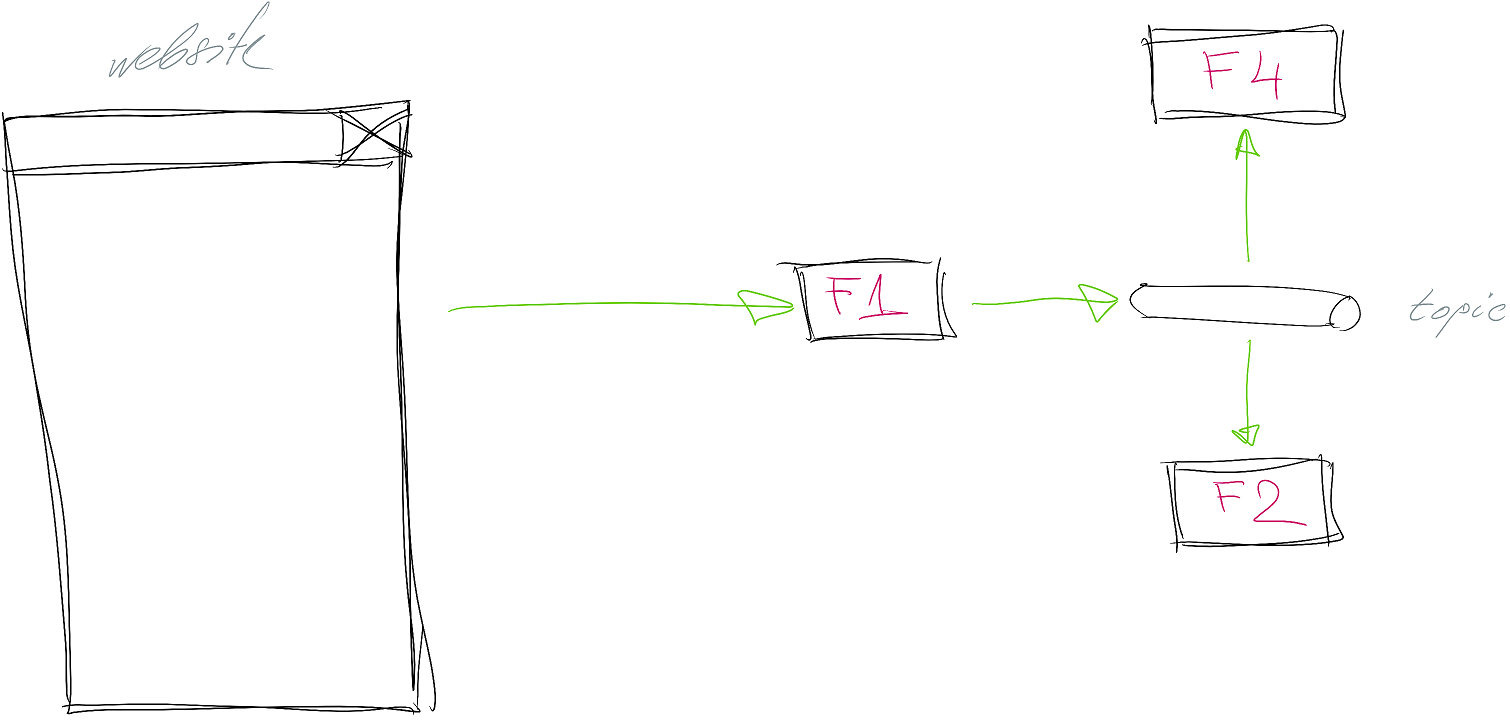

Once the incoming request has been accepted and saved in the ServiceBus topic, it can be processed in parallel: one branch for vision analysis and ASCII art conversion, another for content review.

Function2 performs vision analysis of the image using Azure Cognitive Services. I was surprised how easy and elegant it is to consume these state-of-the-art services:

```csharp
[StorageAccount("my-storage-connection")]
[ServiceBusAccount("my-servicebus-connection")]
public static class Function2
{
    [FunctionName("Function2")]
    [return: Queue("to-ascii-conversion")]
    public static async Task<CloudQueueMessage> Run(
        [ServiceBusTrigger("mytopic", "to-ascii", AccessRights.Manage)] AnalysisReq request,
        [Blob("%input-container%/{BlobRef}", FileAccess.Read)] Stream inBlob,
        TraceWriter log)
    {
        log.Info("(Fun2) Running image analysis...");

        var subscriptionKey = ConfigurationManager.AppSettings["cognitive-services-key"];
        var serviceUri = ConfigurationManager.AppSettings["cognitive-services-uri"];
        var client = new VisionServiceClient(subscriptionKey, serviceUri);

        var result = await client.AnalyzeImageAsync(inBlob,
            new[]
            {
                VisualFeature.Categories,
                VisualFeature.Color,
                VisualFeature.Description,
                VisualFeature.Faces,
                VisualFeature.ImageType,
                VisualFeature.Tags
            });

        var asciiArtRequest = new AsciiArtRequest
        {
            BlobRef = request.BlobRef,
            Width = request.Width,
            Description = string.Join(",", result.Description.Captions.Select(c => c.Text)),
            Tags = result.Tags.Select(t => t.Name).ToArray()
        };

        log.Info("(Fun2) Finished image analysis.");

        return asciiArtRequest.AsQueueItem();
    }
}
```

So what can we read from the function decorations:

- again, Function2 needs access to Storage and ServiceBus (to read the incoming topic message)

- the function is triggered by an item in the topic (via the [ServiceBusTrigger] attribute)

- in the same way as in Function1, it's possible to get a reference to the blob to retrieve the actual byte[] of the image (with the help of the [Blob("...")] attribute)

- the output of the function is an item in a Storage queue (the function's return value is used). The [return: Queue("...")] decoration tells the Visual Studio Azure Functions tooling to generate a binding that posts the function's return value to the queue named to-ascii-conversion in the storage account configured under the my-storage-connection setting (in the settings.json file). This will be the trigger for Function3.

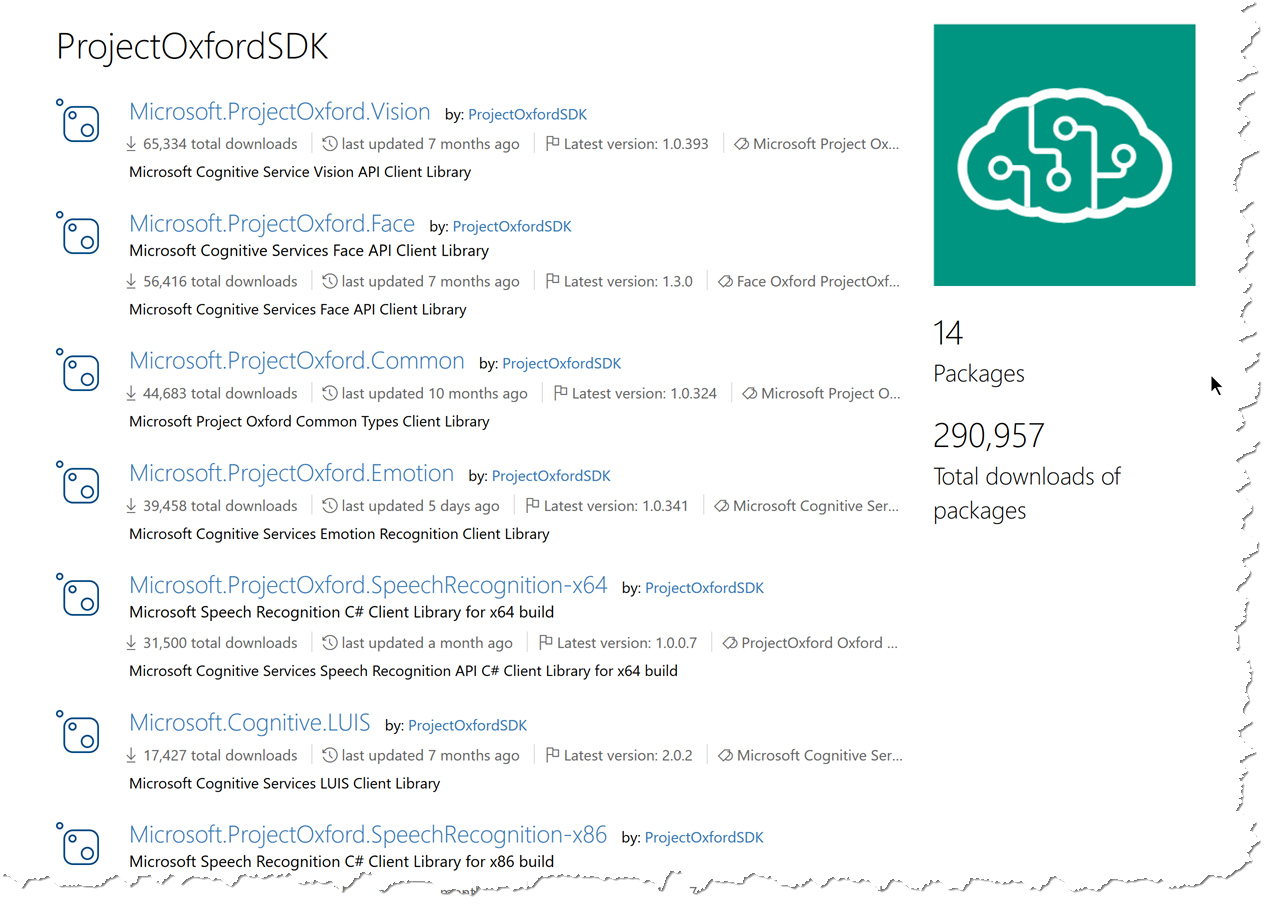

To work with Azure Cognitive Services you will need to install the ProjectOxford package:

```
PM> install-package Microsoft.ProjectOxford.Vision
```

The actual invocation of Cognitive Services is easy:

```csharp
var result = await client.AnalyzeImageAsync(...);
```

So, having captured all the available data from Cognitive Services, we combine it into the next request, the one for the ASCII art. I find this more flexible than making the next function in the chain depend on the previous one to fetch data from somewhere. Data messages are enriched as they flow through the application, leaving no artifacts behind but absorbing all the necessary data.
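The message types themselves are not shown in the post. Below is a sketch of how they could look, inferred from how they are used in the functions and in the Episerver downloader; the exact shapes, the From factory method and the ToJson helper (a hypothetical stand-in for the post's AsQueueItem extension, which returns a CloudQueueMessage) are assumptions. It uses System.Text.Json only to stay dependency-free; the post itself uses Json.NET.

```csharp
using System.Text.Json;

// Published to the topic by Function1
public class AnalysisReq
{
    public string BlobRef { get; set; }
    public int Width { get; set; }
    public string ImageUrl { get; set; }
}

// Produced by Function2: the data it received plus the vision analysis results
public class AsciiArtRequest
{
    public string BlobRef { get; set; }
    public int Width { get; set; }
    public string Description { get; set; }
    public string[] Tags { get; set; }

    // Enrichment: carry forward what the next step needs, add what this step learned
    public static AsciiArtRequest From(AnalysisReq request, string description, string[] tags) =>
        new AsciiArtRequest
        {
            BlobRef = request.BlobRef,
            Width = request.Width,
            Description = description,
            Tags = tags
        };
}

// Produced by Function3 and consumed by the Episerver scheduled job
public class AsciiArtResult
{
    public AsciiArtResult() { }

    public AsciiArtResult(string blobRef, string container, string description, string[] tags)
    {
        BlobRef = blobRef;
        Container = container;
        Description = description;
        Tags = tags;
    }

    public string BlobRef { get; set; }
    public string Container { get; set; }
    public string Description { get; set; }
    public string[] Tags { get; set; }
}

public static class MessageExtensions
{
    // Hypothetical stand-in for AsQueueItem(): serialize the message body to JSON
    public static string ToJson<T>(this T message) => JsonSerializer.Serialize(message);
}
```

Each step only reads its own incoming message, so no function has to call back to an earlier one (or to Episerver) to get data it needs.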

Function3 - Convert

When Function2 has finished, its product (a queue item) is placed in a Storage queue, which triggers the next function. Function3 is responsible for converting the image to ASCII art and placing the result in the next (final) queue.

I'll skip the actual code for converting to ASCII art (you can find lots of interesting implementations on the net), but here is the function itself:

```csharp
[StorageAccount("my-storage-connection")]
public static class Function3
{
    [FunctionName("Function3")]
    [return: Queue("done-images")]
    public static async Task<CloudQueueMessage> Run(
        [QueueTrigger("to-ascii-conversion")] AsciiArtRequest request,
        [Blob("%input-container%/{BlobRef}", FileAccess.Read)] Stream inBlob,
        [Blob("%output-container%/{BlobRef}", FileAccess.Write)] Stream outBlob,
        TraceWriter log)
    {
        log.Info("(Fun3) Starting to convert image to ASCII art...");

        var convertedImage = ConvertImageToAscii(inBlob, request.Width);
        await outBlob.WriteAsync(convertedImage, 0, convertedImage.Length);

        var result = new AsciiArtResult(request.BlobRef,
                                        ConfigurationManager.AppSettings["output-container"],
                                        request.Description,
                                        request.Tags);

        log.Info("(Fun3) Finished converting image.");

        return result.AsQueueItem();
    }
}
```

In this case the function needs a reference to the input blob (the actual image that was uploaded in Episerver and analyzed by Cognitive Services) and also a reference to the output blob, where the image will be stored after ASCII conversion. The result of the function (an AsciiArtResult object) is placed in the Storage queue named done-images.
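Since the conversion code is skipped, here is one possible minimal approach, not the author's ConvertImageToAscii: assuming the image has already been decoded into a grid of grayscale values (0-255), sample it down to the requested Width in columns and map each cell's brightness to a character from a ramp. Because the site renders green characters on a black background, brighter pixels get denser characters. Image decoding is left out to keep the sketch dependency-free; AsciiConverter, the ramp and the centre-pixel sampling are illustrative choices.

```csharp
using System;
using System.Text;

public static class AsciiConverter
{
    // Dark-to-bright ramp: on a black background, denser glyphs look brighter
    private const string Ramp = " .:-=+*#%@";

    // gray[y, x] holds luminance values 0..255; width is the number of output columns
    public static string ToAscii(byte[,] gray, int width)
    {
        int imageHeight = gray.GetLength(0);
        int imageWidth = gray.GetLength(1);
        width = Math.Max(1, Math.Min(width, imageWidth));

        // Each output character covers a block of source pixels. Characters are
        // roughly twice as tall as they are wide, so blocks are twice as tall too.
        double cellWidth = (double)imageWidth / width;
        double cellHeight = cellWidth * 2;
        int rows = Math.Max(1, (int)(imageHeight / cellHeight));

        var sb = new StringBuilder();
        for (int row = 0; row < rows; row++)
        {
            for (int col = 0; col < width; col++)
            {
                // Sample the pixel at the centre of the cell (simple, no averaging)
                int y = Math.Min(imageHeight - 1, (int)((row + 0.5) * cellHeight));
                int x = Math.Min(imageWidth - 1, (int)((col + 0.5) * cellWidth));
                int index = gray[y, x] * (Ramp.Length - 1) / 255;
                sb.Append(Ramp[index]);
            }
            sb.Append('\n');
        }
        return sb.ToString();
    }
}
```

Encoding the returned string as UTF-8 before writing it to the output blob would match the Episerver side, which decodes the blob with Encoding.UTF8.GetString.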

Download to Episerver

Now that the image is processed (analyzed and converted), it's time to download it back to Episerver. At a couple of presentations there were discussions about whether Episerver should download the image or the function should "push" the processing result to Episerver. I picked the solution where the function does nothing more than write down the result of processing. The "consuming" side (in this case Episerver) is responsible for fetching the image and saving it in its own blob storage.

The easiest way to do something periodically in Episerver (such as checking for new processing results) is to implement a scheduled job.

There is nothing interesting to see in the scheduled job itself. I don't like to keep a lot of my logic physically inside the scheduled job; I tend to think of it as just a hosting/scheduling environment for my service. Also, a separate service (read: one isolated from the underlying framework as much as possible) is a bit easier to test.

```csharp
[ScheduledPlugIn(DisplayName = "Download AsciiArt Images")]
public class DownloadAsciiArtImagesJob : ScheduledJobBase
{
    private readonly IAsciiResponseDownloader _downloader;

    public DownloadAsciiArtImagesJob(IAsciiResponseDownloader downloader)
    {
        _downloader = downloader;
    }

    public override string Execute()
    {
        OnStatusChanged($"Starting execution of {GetType()}");

        var log = new StringBuilder();
        _downloader.Download(log);

        return log.ToString();
    }
}
```

Basically, the job gets access to the storage queue (again, for demo purposes everyone can access that queue, but in real life I would recommend handling this with SAS tokens) and checks for any new items in it. If there are any, it means there is a new response from the ASCII art processing function app. Almost all of the business logic is again hidden in helper services.

The actual image downloader:

```csharp
internal class CloudQueueAsciiResponseDownloader : IAsciiResponseDownloader
{
    private readonly IAsciiArtImageProcessor _processor;
    private readonly IAsciiArtServiceSettingsProvider _settingsProvider;

    public CloudQueueAsciiResponseDownloader(
        IAsciiArtServiceSettingsProvider settingsProvider,
        IAsciiArtImageProcessor processor)
    {
        _settingsProvider = settingsProvider;
        _processor = processor;
    }

    public void Download(StringBuilder log)
    {
        var settings = _settingsProvider.Settings;
        var account = CloudStorageAccount.Parse(settings.StorageUrl);
        var queueClient = account.CreateCloudQueueClient();
        var queue = queueClient.GetQueueReference(settings.DoneQueueName);

        var draftMsg = queue.GetMessage();
        if (draftMsg == null)
        {
            log.AppendLine("No messages found in the queue");
            return;
        }

        while (draftMsg != null)
        {
            var message = JsonConvert.DeserializeObject<AsciiArtResult>(draftMsg.AsString);
            log.AppendLine($"Started processing image ({message.BlobRef})...");

            try
            {
                _processor.SaveAsciiArt(account, message);
                // Delete only after a successful save, so failed messages are retried later
                queue.DeleteMessage(draftMsg);
                log.AppendLine($"Finished image ({message.BlobRef}).");
            }
            catch (Exception e)
            {
                log.AppendLine($"Error occurred: {e.Message}");
            }

            draftMsg = queue.GetMessage();
        }
    }
}
```
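One thing worth keeping in mind with this loop: when SaveAsciiArt throws, the message is not deleted, so it becomes visible again after the queue's visibility timeout and is retried on every job run. CloudQueueMessage exposes a DequeueCount that can be used to set such "poison" messages aside. Below is a storage-agnostic sketch of that idea; the IMessageQueue abstraction, QueueMessage, the in-memory queue and the default limit of 5 attempts are all hypothetical, chosen so the sketch runs without Azure.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Hypothetical minimal queue abstraction (the real code uses CloudQueue)
public class QueueMessage
{
    public string Body { get; set; }
    public int DequeueCount { get; set; }
}

public interface IMessageQueue
{
    QueueMessage GetMessage();                 // null when nothing is visible
    void DeleteMessage(QueueMessage message);
    void Park(QueueMessage message);           // e.g. copy to a "poison" queue
}

public static class QueueDrainer
{
    public static void Drain(IMessageQueue queue, Action<string> process,
                             StringBuilder log, int maxAttempts = 5)
    {
        QueueMessage message;
        while ((message = queue.GetMessage()) != null)
        {
            if (message.DequeueCount > maxAttempts)
            {
                // Give up on messages that keep failing instead of retrying forever
                queue.Park(message);
                queue.DeleteMessage(message);
                log.AppendLine($"Parked poison message after {maxAttempts} attempts.");
                continue;
            }

            try
            {
                process(message.Body);
                queue.DeleteMessage(message);
            }
            catch (Exception e)
            {
                // Not deleted: the queue makes it visible again later
                log.AppendLine($"Error occurred: {e.Message}");
            }
        }
    }
}

// In-memory stand-in: received messages stay invisible until deleted or released
public class InMemoryMessageQueue : IMessageQueue
{
    private readonly Queue<QueueMessage> _visible = new Queue<QueueMessage>();
    private readonly List<QueueMessage> _invisible = new List<QueueMessage>();

    public List<QueueMessage> Parked { get; } = new List<QueueMessage>();

    public void Add(string body) => _visible.Enqueue(new QueueMessage { Body = body });

    public QueueMessage GetMessage()
    {
        if (_visible.Count == 0) return null;
        var message = _visible.Dequeue();
        message.DequeueCount++;
        _invisible.Add(message);
        return message;
    }

    public void DeleteMessage(QueueMessage message) => _invisible.Remove(message);

    public void Park(QueueMessage message) => Parked.Add(message);

    // Simulates the visibility timeout expiring for undeleted messages
    public void ReleaseInvisible()
    {
        foreach (var message in _invisible) _visible.Enqueue(message);
        _invisible.Clear();
    }
}
```

With maxAttempts set to 2, a message that always fails is processed on two job runs and parked on the third, while healthy messages are deleted on their first run.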

Once the response from the image processing function app is retrieved, we need to save the metadata about the image and the actual ASCII art as well. This is done in the response processor:

```csharp
internal class AsciiArtImageProcessor : IAsciiArtImageProcessor
{
    private readonly IContentRepository _repository;

    public AsciiArtImageProcessor(IContentRepository repository)
    {
        _repository = repository;
    }

    public void SaveAsciiArt(CloudStorageAccount account, AsciiArtResult result)
    {
        var blobClient = account.CreateCloudBlobClient();
        var container = blobClient.GetContainerReference(result.Container);
        var asciiBlob = container.GetBlobReference(result.BlobRef);

        var image = _repository.Get<ImageFile>(Guid.Parse(result.BlobRef));
        var writable = image.MakeWritable<ImageFile>();

        using (var stream = new MemoryStream())
        {
            asciiBlob.DownloadToStream(stream);
            var asciiArt = Encoding.UTF8.GetString(stream.ToArray());

            writable.AsciiArt = asciiArt;
            writable.Description = result.Description;
            writable.Tags = string.Join(",", result.Tags);

            _repository.Save(writable, SaveAction.Publish, AccessLevel.NoAccess);
        }
    }
}
```

Now the image vision branch is complete. Let's jump over to the content review branch.

Function4 - Evaluate

For Function4 to work, you will need to sign up for the Content Moderator cognitive service (for now, this is done separately from the Cognitive Services setup in Azure). You can sign up here.

I was expecting the same .NET client API libraries as for the image vision services (ProjectOxford), but this is not the case for content moderation. I'll explain this in more detail later in the post (when talking about target framework issues).

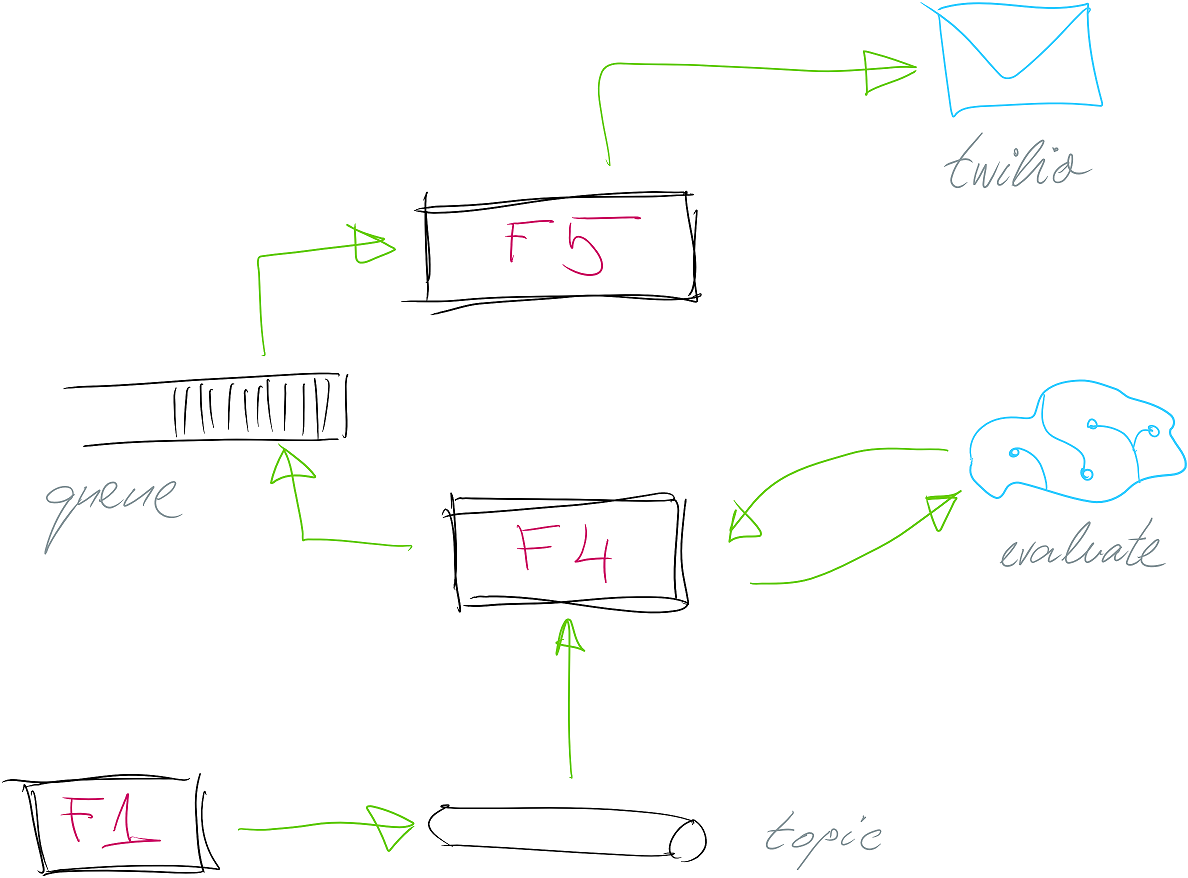

So when somebody uploads an image, a new message is also emitted to the topic. Function4 picks it up (alongside Function2) and starts processing the content of the image. In particular, we are looking for any adult content, and if there is any, the administrator should be notified.

```csharp
[StorageAccount("my-storage-connection")]
[ServiceBusAccount("my-servicebus-connection")]
public static class Function4
{
    [FunctionName("Function4")]
    [return: Queue("to-admin-notif")]
    public static async Task<CloudQueueMessage> Run(
        [ServiceBusTrigger("mytopic", "to-eval", AccessRights.Manage)] AnalysisReq request,
        [Blob("%input-container%/{BlobRef}", FileAccess.Read)] Stream inBlob,
        TraceWriter log)
    {
        log.Info("(Fun4) Running image approval analysis...");

        try
        {
            var subscriptionKey =
                ConfigurationManager.AppSettings["cognitive-services-approval-key"];

            var client = new HttpClient();

            // Request headers
            client.DefaultRequestHeaders.Add("Ocp-Apim-Subscription-Key", subscriptionKey);

            // Request parameters
            var uri = ConfigurationManager.AppSettings["cognitive-services-approval-uri"];

            using (var ms = new MemoryStream())
            {
                inBlob.CopyTo(ms);
                var byteArray = ms.ToArray();

                Task<string> result;
                using (var content = new ByteArrayContent(byteArray))
                {
                    content.Headers.ContentType = new MediaTypeHeaderValue("image/jpeg");
                    var response = await client.PostAsync(uri, content);
                    result = response.Content.ReadAsStringAsync();
                }

                if (result.Result != null)
                {
                    var resultAsObject =
                        JsonConvert.DeserializeObject<ContentModeratorResult>(result.Result);

                    if (resultAsObject.IsImageAdultClassified)
                    {
                        log.Warning("(Fun4) Inappropriate content detected. Sending notification...");
                        return request.AsQueueItem();
                    }
                }
            }
        }
        catch (Exception e)
        {
            log.Info("Error on Running image approval..." + e.Message);
        }

        return null;
    }
}
```

Very similarly to Function2, Function4 needs access to the original image blob (via a blob reference).

Invoking the content cognitive service is not as nice as invoking the image vision services; I'll cover this a bit later in the post. Basically, we create a new REST request to the content cognitive service endpoint and post the image for analysis:

```csharp
using (var content = new ByteArrayContent(byteArray))
{
    content.Headers.ContentType = new MediaTypeHeaderValue("image/jpeg");
    var response = await client.PostAsync(uri, content);
    result = response.Content.ReadAsStringAsync();
}

if (result.Result != null)
{
    var resultAsObject =
        JsonConvert.DeserializeObject<ContentModeratorResult>(result.Result);

    if (resultAsObject.IsImageAdultClassified)
    {
        log.Warning("(Fun4) Inappropriate content detected. Sending notification...");
        return request.AsQueueItem();
    }
}
```

And back we get a simple type describing the results (this is just a projection of the whole response):

```csharp
public class ContentModeratorResult
{
    public bool IsImageAdultClassified { get; set; }
    public bool IsImageRacyClassified { get; set; }
}
```
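Because ContentModeratorResult only declares the two properties we care about, the JSON serializer simply ignores everything else in the response. The sketch below shows this with System.Text.Json; ModerationCheck is a hypothetical helper, and the post itself uses Json.NET, which also ignores unmapped properties by default.

```csharp
using System.Text.Json;

public class ContentModeratorResult
{
    public bool IsImageAdultClassified { get; set; }
    public bool IsImageRacyClassified { get; set; }
}

public static class ModerationCheck
{
    // Returns true when the service flagged the image as adult content.
    // Properties not declared on ContentModeratorResult (scores etc.) are ignored.
    public static bool ShouldNotifyAdmin(string responseJson)
    {
        var result = JsonSerializer.Deserialize<ContentModeratorResult>(responseJson);
        return result != null && result.IsImageAdultClassified;
    }
}
```

For example, ShouldNotifyAdmin("{\"AdultClassificationScore\":0.97,\"IsImageAdultClassified\":true}") returns true; the score property in that illustrative payload is simply skipped.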

As you can see from the function's decorations, its return value is used as a Storage queue item. So if you don't want to put anything in the queue, just return null.

Function5 - Notify

And last but not least, Function5 is responsible for delivering notifications to the administrator(s) if inappropriate content is detected.

```csharp
[StorageAccount("my-storage-connection")]
public static class Function5
{
    [FunctionName("Function5")]
    [return: TwilioSms(AccountSidSetting = "twilio-account-sid",
                       AuthTokenSetting = "twilio-account-auth-token")]
    public static SMSMessage Run(
        [QueueTrigger("to-admin-notif")] AnalysisReq request,
        TraceWriter log)
    {
        log.Info("(Fun5) Sending SMS...");

        var baseUrl = ConfigurationManager.AppSettings["base-url"];
        var from = ConfigurationManager.AppSettings["twilio-from-number"];
        var to = ConfigurationManager.AppSettings["twilio-to-number"];

        return new SMSMessage
        {
            From = from,
            To = to,
            Body = $@"Someone uploaded an inappropriate image to your site.
The image Id is {request.BlobRef},
url is {baseUrl + request.ImageUrl}"
        };
    }
}
```

Again, for demo purposes, the to parameter (the actual phone number to send the SMS to) is hard-coded in the settings.json file.

The function is triggered by the Storage queue; if there is no item in the queue, the function is not invoked at all (and you will not be charged if running on the "consumption pricing plan").

As you can see, there is no more interaction with Episerver in the content review branch: if inappropriate content is detected, an SMS message is simply sent.

Challenge with Target Frameworks

Upgrade to .Net Standard 2.0

So why is there a challenge in using the various built-in packages for strongly typed bindings and other out-of-the-box goodies? Let's go through this together.

Imagine that we are building a simple Azure Function App using Visual Studio Tools for Azure Functions (the latest at the moment of writing: 15.0.30923).

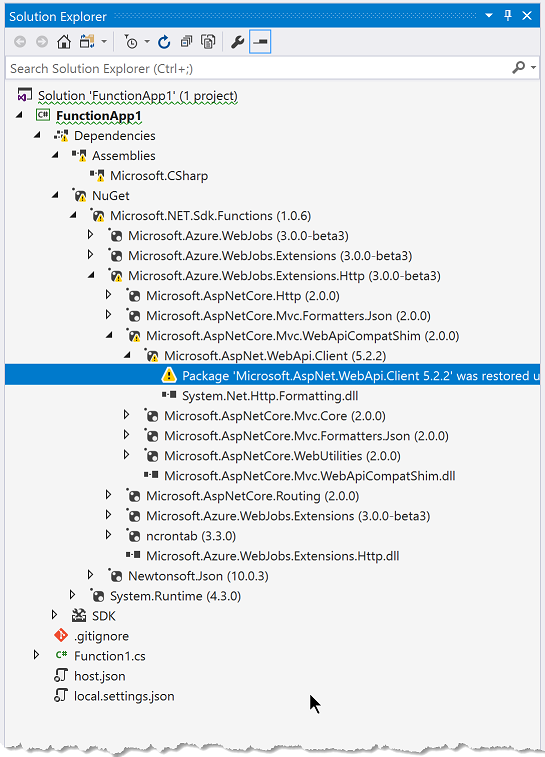

By default, with that version of the templates, the Microsoft.NET.Sdk.Functions package is referenced with version 1.0.2. As you might have heard, running on .NET Standard is the new thing, so let's try to migrate there (VS Tooling has no support for it yet). We need to upgrade the Microsoft.NET.Sdk.Functions package to 1.0.6, which is easy, and also change the target framework in the .csproj file to netstandard2.0.

After a NuGet package restore I'm getting some dependency errors, and... yeah... I don't even know where that dependency comes from, why it's needed and, most importantly, why it's not restoring for .NET Standard.

```
Package 'Microsoft.AspNet.WebApi.Client 5.2.2' was restored using '.NETFramework,Version=v4.6.1'
```

I also see a yellow exclamation mark beside that dependency in Solution Explorer.

The function created in the sample app comes AS-IS from the VS Tools for Azure Functions template:

```csharp
public static class Function1
{
    [FunctionName("Function1")]
    public static void Run(
        [TimerTrigger("0 */5 * * * *")] TimerInfo myTimer,
        TraceWriter log)
    {
        log.Info($"C# Timer trigger function executed at: {DateTime.Now}");
    }
}
```

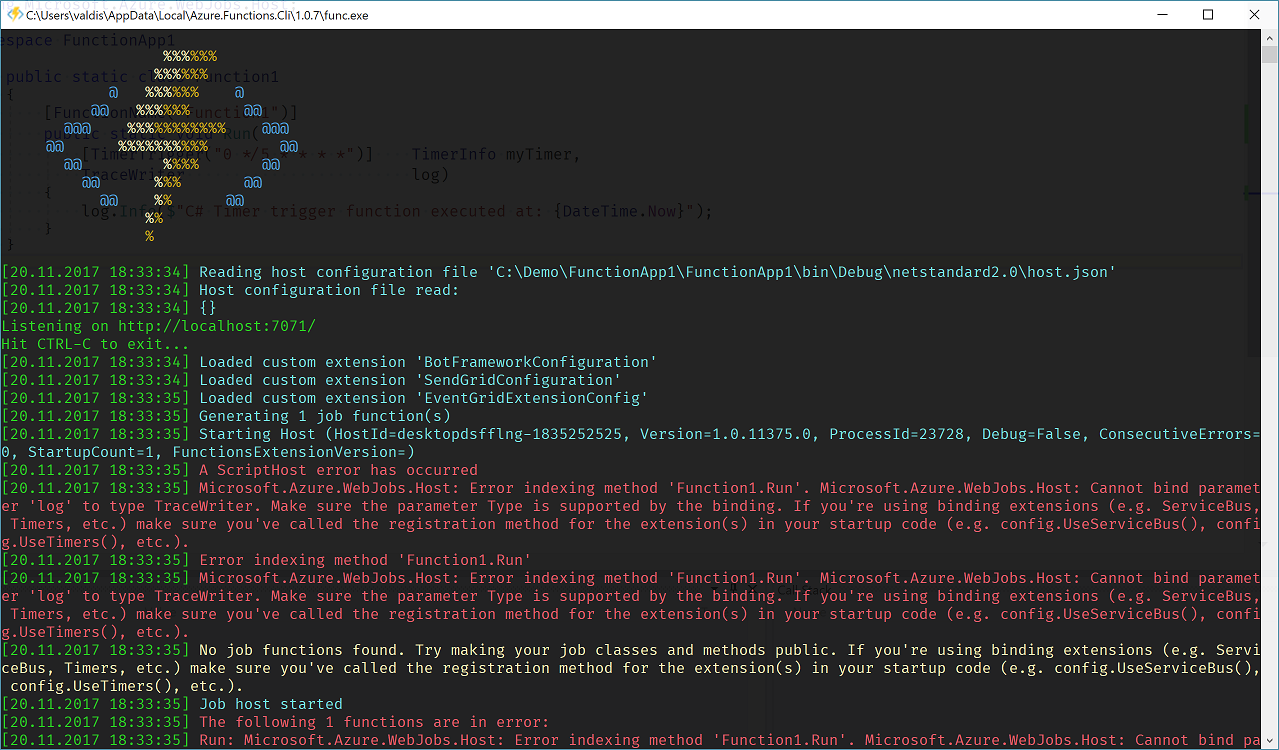

Now, running this simple function app targeted at .NET Standard gives another error:

This should be easy to fix according to this GitHub issue. Running again, but this time with ILogger from Microsoft.Extensions.Logging:

```csharp
public static class Function1
{
    [FunctionName("Function1")]
    public static void Run(
        [TimerTrigger("0 */5 * * * *")] TimerInfo myTimer,
        ILogger log)
    {
        log.LogInformation($"C# Timer trigger function executed at: {DateTime.Now}");
    }
}
```

This gives exactly the same error: failing to provide a binding value for the ILogger interface. There are also some traces on GitHub, and a couple of issues are still open (#452 on Azure-Functions and #573 on azure-webjobs-sdk-script).

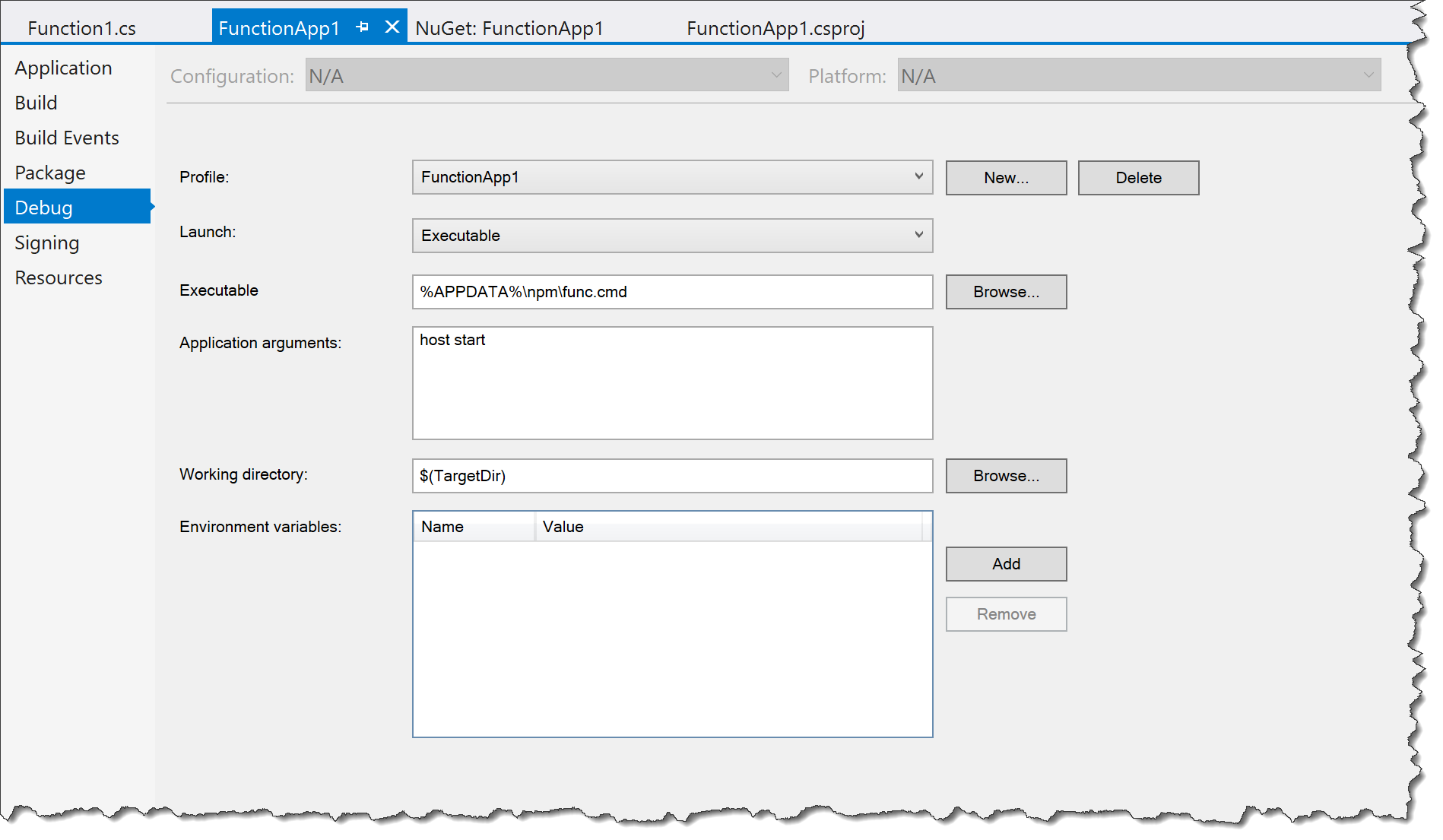

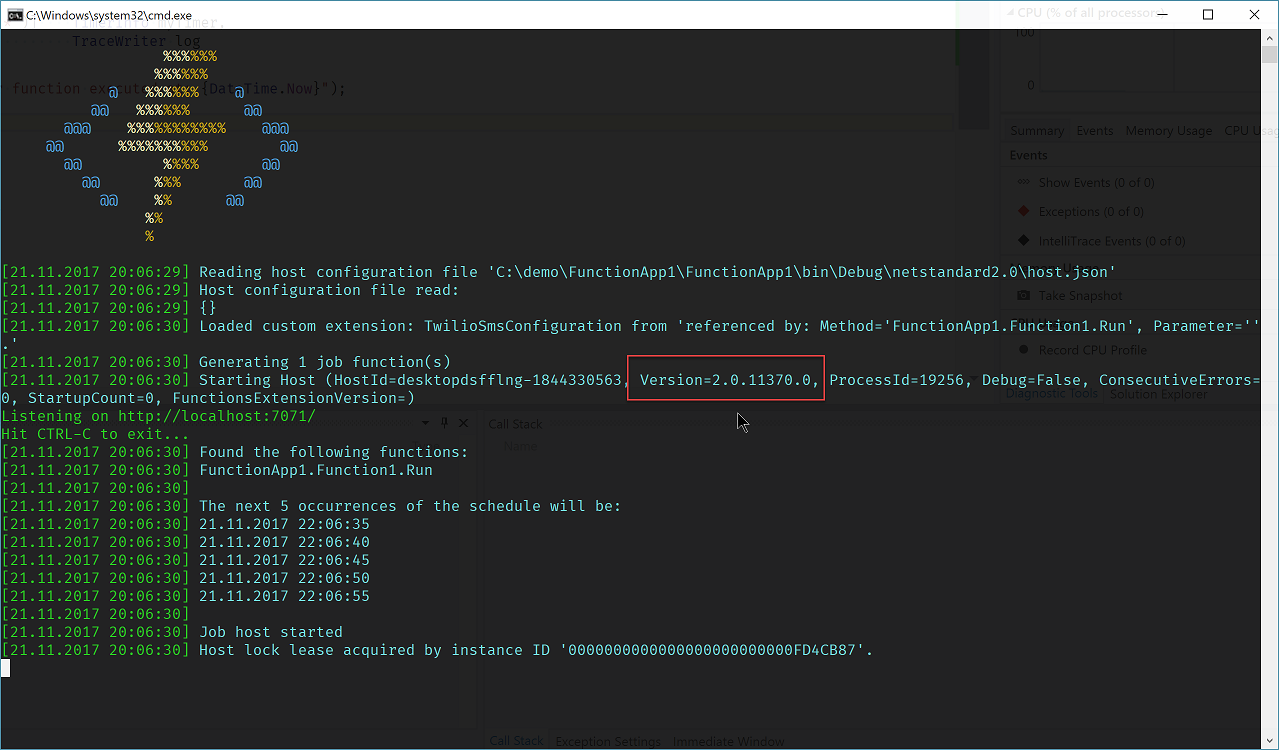

It actually turned out that we need to download the new version of the Azure Functions runtime and set our project to run under it (that was not obvious at first sight).

The action plan:

- Download Azure Functions Core Tools (via npm):

```
> npm i -g azure-functions-core-tools@core
```

- Set the project to execute within the new runtime:

Now we are at least able to resolve ILogger.

However, a local run gave no results in the console output. I'm not the only one.

Ok, as for the logging, let's hope it will work at some point.

Now we need to do some Storage and ServiceBus things. With Storage everything is easy: it's already part of the Microsoft.Azure.WebJobs package (the main package for Azure Functions; remember that functions are built on top of WebJobs). On the other hand, for ServiceBus we need a separate package, Microsoft.Azure.WebJobs.ServiceBus. The latest available version of this package is 2.1.0-beta4, which in turn requires Microsoft.Azure.WebJobs version 2.1.0-beta4. Attempting to install it gives the following message:

```
Package 'Microsoft.Azure.WebJobs.ServiceBus 2.1.0-beta4' was restored using '.NETFramework,Version=v4.6.1' instead of the project target framework '.NETStandard,Version=v2.0'. This package may not be fully compatible with your project.
```

The ServiceBus extensions package requires us to fall back to at least .NET Framework 4.6.1. Ok, also not a problem; we can survive without the latest and greatest.

Next, we need to talk to the Content Moderator cognitive service (preferably via a strongly typed client API library rather than the REST interface). Let's look for this package:

If we look for a Content Moderator client API library, there is an unofficial package (https://www.nuget.org/packages/Microsoft.ProjectOxford.ContentModerator.DotNetCore/) for that. But it targets .NET Core (v1.1.1).

I'm not sure whether .NET Core is supported in the Azure Functions runtime at all, but we cannot use it anyway, because the ServiceBus extension library constrains us to the net461 target framework. So we switch back to .NET Framework (also remember to remove the project setting that makes it run under the Azure Functions 2.0 runtime).

So I guess we will need to wait a bit longer until all the packages align and .NET Standard 2.0 is supported everywhere.

Where to Grab the Stuff?

If you want to play around with the source code, you can fork it here.

If you want to see the original presentation, you can grab it here.

See it in action:

Happy functioning!

[eof]
